Overview

GTag aims to help visually impaired and blind people shop for groceries independently in an urban context. It consists of a mobile app and third-party NFC tags. The primary function of GTag is to record grocery-related information (e.g., expiration dates, cooking instructions) in the store and replay it at any time after shopping.

Timeline

08/2021 - 12/2021

Tools

Figma

ProtoPie

Miro

Adobe Illustrator

Otter.ai

Team

Watson Hartsoe

Tommy Ottolin

Meichen Wei

Qiqi Yang

My Role

UX Designer

- Designed and iterated on the digital prototype's user flows, wireframes, prototypes, and audio style guide

- Suggested NFC technology and the idea of combining physical tags with a digital mobile app

- Designed the "Wizard of Oz" method for using prototypes in user evaluations, and acted as the technician operating the prototype during those evaluations

UX Researcher

- Developed the user scoping spectrum idea with help from teammates

- Created a hierarchical task analysis based on contextual inquiry findings

- Analyzed data and generated findings and design requirements from interviews and contextual inquiries

Problem

When shopping for groceries and consuming the food they buy, people with vision loss commonly face these problems:

Obtaining information from food packages, especially expiration dates

Identifying foods of the same size and shape

In the grocery shopping context, how might we minimize cognitive load for visually impaired people by making information accessible?

Solution

Whenever no one is around to be your "eyes", GTag is here to help!

Write

Users store information from food packaging on designated NFC tags by making audio recordings. A new NFC tag must first be scanned to register it with the app before any information can be stored on it.

Read

To access information, users simply scan the tags attached to food packages and listen to the recordings. Tags can be read from inside or outside the app.

Erase

Information stored in each tag can be deleted.

Edit

Information already stored in a tag can be edited.
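As a rough illustration of how the four operations relate, the sketch below models tags in memory in Python. It is a hypothetical sketch, not GTag's implementation: `TagStore` and its methods are invented names, a dictionary keyed by tag ID stands in for real NFC reads and writes, and audio recordings are represented by file names. It encodes the rule that a new tag must be registered before anything can be stored on it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class TagStore:
    """Hypothetical in-memory stand-in for GTag's NFC tags (not the real app code)."""

    # Tag ID -> stored recording; None means the tag is registered but empty.
    tags: Dict[str, Optional[str]] = field(default_factory=dict)

    def register(self, tag_id: str) -> None:
        # A new tag must be scanned into the app before it can store anything.
        self.tags.setdefault(tag_id, None)

    def write(self, tag_id: str, recording: str) -> None:
        # Write: store an audio recording on a registered tag.
        if tag_id not in self.tags:
            raise KeyError(f"tag {tag_id!r} must be registered before writing")
        self.tags[tag_id] = recording

    def read(self, tag_id: str) -> Optional[str]:
        # Read: return the stored recording, or None if the tag is empty.
        return self.tags[tag_id]

    def erase(self, tag_id: str) -> None:
        # Erase: clear the content but keep the tag registered for reuse.
        if tag_id not in self.tags:
            raise KeyError(f"unknown tag {tag_id!r}")
        self.tags[tag_id] = None

    def edit(self, tag_id: str, recording: str) -> None:
        # Edit: replace a recording that already exists on the tag.
        if self.read(tag_id) is None:
            raise ValueError(f"tag {tag_id!r} is empty; write to it first")
        self.tags[tag_id] = recording


store = TagStore()
store.register("tag-01")
store.write("tag-01", "milk_expiration.m4a")
store.edit("tag-01", "milk_expiration_updated.m4a")
print(store.read("tag-01"))  # milk_expiration_updated.m4a
store.erase("tag-01")
print(store.read("tag-01"))  # None
```

Keeping an erased tag registered, rather than forgetting it, is an assumption made here so that one physical tag can be reused on another item.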

Research

Comparative Analysis

First, we looked at commonly used mobile assistive applications that help visually impaired people with everyday tasks. This gave us an understanding of the assistive technology ecosystem our users rely on, which helped us design a user-friendly product.

Be My Eyes

A mobile application that connects visually impaired users with sighted remote volunteers who help them see objects and their surroundings.

Be My Eyes - https://www.bemyeyes.com/

Seeing AI

An AI (artificial intelligence)-powered mobile app that serves the same purpose as Be My Eyes.

Seeing AI - https://www.microsoft.com/en-us/ai/seeing-ai

Contextual Inquiries & Interviews

Our Visually Impaired Participants: 01, 02, 03, 04, 05, 06

Our discussions and observations focused mainly on participants' grocery shopping experiences. We also asked about their perspectives on popular assistive technologies, their medical conditions, daily lives, and careers. We then analyzed the notes from the contextual inquiries and interviews to inform later research and design.

User Scoping Spectrums

The spectrums are a high-level analysis of participant behaviors collected from contextual inquiries and interviews, used to find relationships among the behavioral data. Some relationships are stronger than others.

The spectrums helped us narrow the user group down to visually impaired people living in urban contexts. Our product would fit within the current assistive technology ecosystem to promote independent shopping.

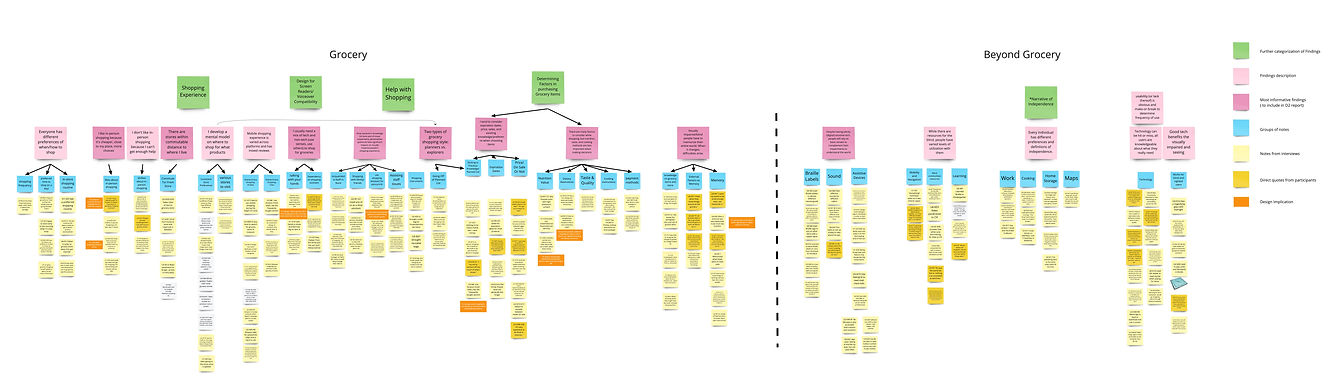

Affinity Map

We gathered notes from contextual inquiries and interviews and arranged them into two groups: grocery shopping and beyond grocery shopping. Then, we split the notes into smaller groups by similar patterns of issues and preferences and gave each group a high-level description.

We identified design ideas from some of the notes and used darker note colors to signify importance. Finally, we synthesized the most important research findings and applied them to the product design.

Research Findings

Based on observations from contextual inquiries and interviews, we derived research findings from patterns of issues and preferences in grocery shopping.

#1

Planning vs. Exploring Shopping Styles

#2

The 3 Most Applied Factors in Selecting Grocery Items

#3

In-Person Grocery Shopping for Urban Shoppers

#4

Use of Sensory Inputs in Grocery Shopping

#5

Visually Impaired People Memorize the Entire World

#6

Helpers' Knowledge and Personalities Affect the Experience

Design

Design Requirements

From the research findings, we created functional (specific to the product's functions) and non-functional design requirements. We then narrowed these down to the three most important and influential requirements (circled), which served as guidelines for designing the product.

Functional Requirements:

1. Human

1A. Design empowers visually impaired and blind shoppers to independently shop with helpers

1B. Design supports cognitive recall in store and at home

2. Computer

2A. Design is multimodal, not just visual

2B. Design supports cognitive recall in store and at home

3. Interaction

3A. Design presents information at the point of choosing the product: expiration, sale, and related items

3B. Design presents information at the point of using the product: expiration and cooking instructions

Non-Functional Requirements:

4. Supportive of Diversity

Design should support and accommodate different shopping styles and allow users to access desired information to make purchase decisions

5. Intuitively Usable

Design should be compatible with the present ecosystem of support and require minimal cognitive labor to learn and use

6. Collaborative

Design should promote healthy relationships between visually impaired users, shop assistants, and other stakeholders involved in decision-making

7. Trustworthy

Design should assist users in confirming desired items to purchase with certainty and to recall information easily and accurately when they want to

Brainstorming Ideas

We brainstormed together to generate as many ideas as possible around 4 selected topics related to the design problem. Each of us then chose 5 ideas from the session and sketched them. Finally, we narrowed these down to 10 unique ideas and refined the sketches.

Among the 10 ideas, we found that several could each effectively solve a problem or satisfy a need somewhere in the visually impaired shopper's overall grocery shopping experience.

We presented and discussed the ideas with 2 visually impaired participants to gather their opinions, then picked the idea that best met the design requirements. The final design combines 2 design sketches, which we further defined and developed into a single product: GTag.

Idea #5: AI Expiration Date Scanner/Tracker with Audio Cue

Idea #10: Food Information Locator


Prototyping

Sighted Helper

Haptic

Audio

Smartphone

NFC Technology

How did we decide to use NFC technology for our product?

Our User Group

Visually Impaired People

Satisfies 2 interactions for visually impaired users with technology commonly used across the whole user group

NFC tag scanning for mobile:

Hold the upper back of the phone close to the NFC tag. The tag can be scanned without touching it from up to 4 cm away.

3D-printed NFC tag carriers are used to attach tags to food packages. We also tried other methods, such as sticking tags onto the packaging with adhesive materials, but carriers with rubber bands proved to be the easiest way to attach NFC tags to, and detach them from, the desired items.

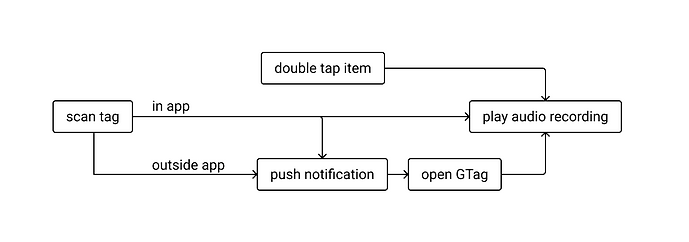

We based the 4 user flows for the GTag app on the flow of using NFC technology, then customized them for our users and for the settings of grocery shopping and food consumption.

Wireframes

When designing the GTag mobile app, we referred to the W3C Web Accessibility Initiative (WAI) guidance on applying WCAG 2.0 and other guidelines to mobile content (Mobile Accessibility). The visual style guide shows the accessibility considerations for the GTag app.

Colors

All colors used meet the Enhanced Contrast level (Level AAA) contrast ratio of 7:1.
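The 7:1 figure is the WCAG 2.0 contrast ratio, computed from the relative luminance of the foreground and background colors. A minimal Python sketch of that formula follows; the function names and sample colors are illustrative only and are not GTag's actual palette. Per WCAG 2.0 Success Criterion 1.4.6, Level AAA requires 7:1 for normal text and 4.5:1 for large text.

```python
def _channel(c8: int) -> float:
    # Linearize one 8-bit sRGB channel as defined in WCAG 2.0.
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    # Parse "#RRGGBB" and apply the WCAG 2.0 luminance weights.
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    # (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance.
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_aaa(fg: str, bg: str, large_text: bool = False) -> bool:
    # Level AAA: 7:1 for normal text, 4.5:1 for large text.
    return contrast_ratio(fg, bg) >= (4.5 if large_text else 7.0)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # 21.0
print(round(contrast_ratio("#777777", "#FFFFFF"), 2))  # 4.48
print(meets_aaa("#777777", "#FFFFFF"))  # False
```

Because the ratio is symmetric, the order of the two colors does not matter; swapping foreground and background gives the same result.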

Type

To ensure legibility, the sans-serif typeface Roboto was used.

Six font styles establish the visual hierarchy.

We researched how smartphones give audio feedback in response to user actions and applied various audio cues to the app. We also added vibration as haptic feedback in addition to audio.

Audio Cues (Sound Effect)

Tag loaded successfully

Start and stop recording

New tag created

Erase tag

VoiceOver

- Reads content when it is tapped once

- Voice indicates available actions

- 1.5 times speaking speed

Tap once:

"Create A New Tag

Double tap to create"


We created the following design guidelines to help us meet the design requirements. Each is accompanied by examples showing how it was applied in designing GTag.

Perceivable in diverse ways

Voice: “Ready to Scan”

Sound + vibration: “Bling!” after a tag is successfully scanned

Support reconfirmation

Accessible interface

Color contrast

Text size

1.2-1.5 times larger

Dictation (speech to text)

Touch target’s size and spacing

Touch targets larger than 9 mm × 9 mm

Inactive space around small size touch targets
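The 9 mm guideline is a physical size, while design tools such as Figma work in points or pixels, so checking a touch target means converting through the screen's pixel density. The Python sketch below does that conversion. The pixel density (PPI) and scale factor are device-specific inputs; the example values (326 PPI at a 2x scale factor, as on the iPhone 8) are an assumption for illustration, and `min_target_points` and `meets_min_target` are hypothetical helper names.

```python
MM_PER_INCH = 25.4


def min_target_points(min_mm: float, ppi: float, scale: float) -> float:
    """Convert a physical size in millimetres to points on a given screen.

    ppi: physical pixels per inch of the device screen (device-specific).
    scale: physical pixels per point (e.g. 2.0 on a 2x display).
    """
    pixels = min_mm / MM_PER_INCH * ppi
    return pixels / scale


def meets_min_target(width_pt: float, height_pt: float, ppi: float, scale: float,
                     min_mm: float = 9.0) -> bool:
    # Both dimensions must reach the minimum physical size (9 mm by default).
    min_pt = min_target_points(min_mm, ppi, scale)
    return width_pt >= min_pt and height_pt >= min_pt


print(round(min_target_points(9.0, ppi=326, scale=2.0), 1))  # 57.8
print(meets_min_target(44, 44, ppi=326, scale=2.0))  # False
```

Since the result depends directly on the assumed density, the same 9 mm target maps to a different point size on each device.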

Audio outputs

After scan


Requires minimum learning

GTag uses pre-existing control schemes so that users can rely on simple, familiar commands.

- The same action scans and loads NFC tags in the app

- Tap once to start recording and tap again to stop, building on familiar actions

Evaluation

We conducted a User Evaluation session with a visually impaired shopper and a sighted helper by joining them on a grocery shopping trip. Team members took on different roles during the evaluation: moderator, notetaker, and wizard (the facilitator who operated the prototype).

User Tasks

#1

Write

Helper's Task

Shopper chose the information for the helper to write.

Shopper witnessed the whole process.

#2

Read

Shopper's Task

(VoiceOver On)

Shopper scanned NFC tags written by Helper.

Shopper read pre-recorded information to distinguish items with similar packaging.

#3

Erase

Shopper's Task

(VoiceOver On)

Shopper performed the erase function following instructions.

We conducted short interviews with the helper and shopper separately to collect feedback.

Key findings from the User Evaluation session:

01

The helper could not tell how and when to start and stop voice recording.

02

The shopper had no issues holding their phone throughout the trip or handing it to the helper to create new tags.

03

The helper performed the tasks, such as recording a new tag, faster the more they used the app.

04

The shopper was able to retrieve recorded information to distinguish items with similar packaging.

We also conducted an Expert Evaluation session with another project team from our class. It began with a free click-around session in which participants interacted with GTag and guessed its purpose. Then, we asked them to role-play as shoppers and helpers to perform the tasks above.

Prototype Improvement

I made improvements to the GTag prototype based on feedback collected from User and Expert Evaluations.

Onboarding pages to familiarize first-time users with the process of creating new tags

Renamed “Wipe” to “Erase”

Switched to more universally understandable names to avoid confusion

Future Improvement

- Program GTag to be compatible with VoiceOver (screen reader)

- Continue improving and testing GTag with visually impaired and sighted users

- Make GTag more customizable to users with different financial statuses, living situations, and technological needs

- Try and test new technologies for improving visually impaired people's grocery shopping experience

Prototype

This is a video demo for GTag (VoiceOver Version). It showcases the process of using the Write, Read, Erase, and Edit features of GTag.

GTag demo